Hippo is an open source content management system built on top of the Jackrabbit repository. Hippo is not the only CMS that does this trick, but it is a big one. With version 7 out, you can now use some nice features they have created. This post discusses one of these features in detail: workflow. I’ll explain what it is, what it can be used for and how you can extend it with business events.

I do want to stress that this is my own idea of how this can be implemented. I did try it out, but do not consider it a best practice (yet). See it as inspiration for your own extensions; maybe Hippo will contain something like this in the future.

The idea behind this post came from talks with Allard and Jeroen Reijn. Jeroen will write a blog post about a specific part of the solution, which I describe later in this post.

The problem

Let me start by describing why I wanted a solution like this. For a project we are doing, we want the content of the repository to be available in a Solr instance. Yes, I know Hippo comes with Lucene out of the box, but we have chosen to use Solr; the reasons are not important for the scope of this post. Because of this, we need to know when content in the repository changes. Of course there are multiple mechanisms available to do this, and I have blogged before about repository listeners. These listeners have a big disadvantage: they are not part of the repository, but of a client that connects to the repository. They are also very low level, responding to nodes being added or changed. We are more interested in the business events that take place, for example the publication of a document. So we want to integrate with the workflow in the CMS/repository.

Jeroen showed me a way to do this with Hippo 7 using a daemon module.

The design

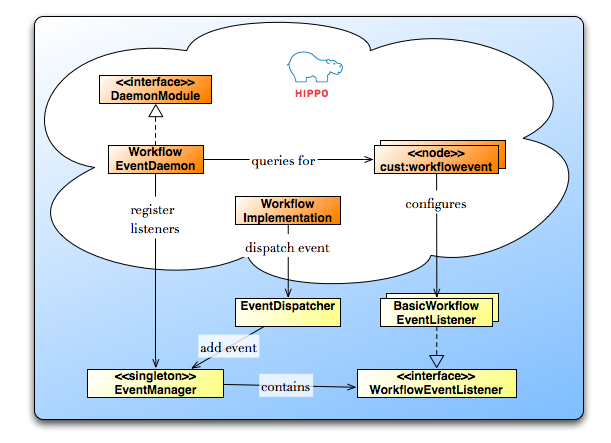

The following image gives an overview of the design for the solution.

Let’s start with the WorkflowEventDaemon, a class that gets instantiated by Hippo during initialization of the repository. This daemon queries the repository for nodes of type cust:workflowevent. Each of these nodes contains the class name of a WorkflowEventListener implementation, and the daemon uses these nodes to register event listeners with the EventManager. Next up are the workflow implementations. The provided workflows currently do not raise events, but luckily it is fairly easy to extend them so they do. The workflow implementations use EventDispatcher instances to actually send an event to the EventManager, which in turn calls the registered listeners.
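The post uses WorkflowEvent and WorkflowEventListener throughout but never lists them. The sketch below is a minimal guess at their shape: the String constructor and the handleEvent(WorkflowEvent) method come from the code later in this post, while getMessage() and the wrapper class WorkflowEventTypes are assumptions made so the example compiles and runs on its own.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical reconstruction of the two event types used in this post.
// Only the String constructor and handleEvent(WorkflowEvent) are taken from
// the post's code; getMessage() is an assumption.
public class WorkflowEventTypes {

    /** Immutable value object describing a business event raised by a workflow. */
    static final class WorkflowEvent {
        private final String message;

        WorkflowEvent(String message) {
            this.message = message;
        }

        String getMessage() {
            return message;
        }

        @Override
        public String toString() {
            return "WorkflowEvent[" + message + "]";
        }
    }

    /** Callback that the EventManager invokes for every event. */
    interface WorkflowEventListener {
        void handleEvent(WorkflowEvent event);
    }

    public static void main(String[] args) {
        List<String> received = new ArrayList<String>();
        // A listener that simply records the message of each event it receives
        WorkflowEventListener recorder = event -> received.add(event.getMessage());
        recorder.handleEvent(new WorkflowEvent("document published"));
        System.out.println(received); // prints [document published]
    }
}
```

Keeping the event a small immutable value object means listeners can safely hold on to it after handleEvent returns.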

That is the basic idea behind the solution; let’s focus on the implementation now.

The implementation

Node type definition

First we need to tell the repository about the new node type. This is done using a CND (Compact Namespace and Node Type Definition) file, which looks like this:

```
<'cust'='https://www.gridshore.nl/cust/1.0'>
<'hippostd'='http://www.hippoecm.org/hippostd/nt/1.2'>
<'hippo'='http://www.hippoecm.org/nt/1.2'>

[cust:workflowevent]
- cust:class (String)
```

Hippo has a mechanism to load nodes into the repository during startup: if you provide a file named hippoecm-extension.xml, Hippo picks it up and puts these nodes in the repository. To load the CND file we add the following configuration:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<sv:node xmlns:sv="http://www.jcp.org/jcr/sv/1.0" sv:name="hippo:initialize">
  <sv:property sv:name="jcr:primaryType" sv:type="Name">
    <sv:value>hippo:initializefolder</sv:value>
  </sv:property>
  <sv:node sv:name="cust">
    <sv:property sv:name="jcr:primaryType" sv:type="Name">
      <sv:value>hippo:initializeitem</sv:value>
    </sv:property>
    <sv:property sv:name="hippo:sequence" sv:type="Double">
      <sv:value>10009</sv:value>
    </sv:property>
    <sv:property sv:name="hippo:namespace" sv:type="String">
      <sv:value>https://www.gridshore.nl/cust/1.0</sv:value>
    </sv:property>
    <sv:property sv:name="hippo:nodetypesresource" sv:type="String">
      <sv:value>cust-types.cnd</sv:value>
    </sv:property>
  </sv:node>
</sv:node>
```

WorkflowEventListener

If you are interested in workflow events, you need to register a listener. Listeners are configured in the repository, and we can add the configuration node during startup by adding the following item to the hippoecm-extension.xml file, as a child of the hippo:initialize node:

```xml
<sv:node sv:name="content-workflowevents">
  <sv:property sv:name="jcr:primaryType" sv:type="Name">
    <sv:value>hippo:initializeitem</sv:value>
  </sv:property>
  <sv:property sv:name="hippo:sequence" sv:type="Double">
    <sv:value>10500</sv:value>
  </sv:property>
  <sv:property sv:name="hippo:contentresource" sv:type="String">
    <sv:value>workflowevents.xml</sv:value>
  </sv:property>
  <sv:property sv:name="hippo:contentroot" sv:type="String">
    <sv:value>/content</sv:value>
  </sv:property>
</sv:node>
```

This item references the workflowevents.xml file, shown below. It contains a node of type cust:workflowevent that points to a very simple logging implementation of the WorkflowEventListener interface:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<sv:node sv:name="workflowevents" xmlns:sv="http://www.jcp.org/jcr/sv/1.0">
  <sv:property sv:name="jcr:primaryType" sv:type="Name">
    <sv:value>hippostd:folder</sv:value>
  </sv:property>
  <sv:property sv:name="jcr:mixinTypes" sv:type="Name">
    <sv:value>hippo:harddocument</sv:value>
  </sv:property>
  <sv:node sv:name="Logging Listener">
    <sv:property sv:name="jcr:primaryType" sv:type="Name">
      <sv:value>cust:workflowevent</sv:value>
    </sv:property>
    <sv:property sv:name="cust:class" sv:type="String">
      <sv:value>nl.gridshore.addons.events.impl.LoggerWorkflowEventListener</sv:value>
    </sv:property>
  </sv:node>
</sv:node>
```
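The LoggerWorkflowEventListener class referenced in this file is not listed in the post. A minimal sketch, assuming the WorkflowEventListener interface has a single handleEvent(WorkflowEvent) method, might look like the following. The event types are restated (with assumed shapes) so the example compiles on its own, java.util.logging stands in for whatever logging library the project actually uses, and the handled counter is an addition for testing. Note the public no-argument constructor: the daemon creates listeners through reflection, so it needs one.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.logging.Level;
import java.util.logging.Logger;

// Sketch of a logging WorkflowEventListener. WorkflowEvent and
// WorkflowEventListener are minimal stand-ins with assumed shapes.
public class LoggerWorkflowEventListenerDemo {

    static final class WorkflowEvent {
        private final String message;
        WorkflowEvent(String message) { this.message = message; }
        String getMessage() { return message; }
    }

    interface WorkflowEventListener {
        void handleEvent(WorkflowEvent event);
    }

    /** Writes every received event to the log. */
    public static class LoggerWorkflowEventListener implements WorkflowEventListener {
        private static final Logger LOG =
                Logger.getLogger(LoggerWorkflowEventListener.class.getName());

        // Thread-safe counter, only here to make the listener observable in tests
        private final AtomicInteger handled = new AtomicInteger();

        // Public no-arg constructor, required for reflective instantiation
        public LoggerWorkflowEventListener() { }

        @Override
        public void handleEvent(WorkflowEvent event) {
            handled.incrementAndGet();
            LOG.log(Level.INFO, "Received workflow event: {0}", event.getMessage());
        }

        public int getHandledCount() {
            return handled.get();
        }
    }

    public static void main(String[] args) {
        LoggerWorkflowEventListener listener = new LoggerWorkflowEventListener();
        listener.handleEvent(new WorkflowEvent("This is my first workflow event from the publish"));
        System.out.println(listener.getHandledCount()); // prints 1
    }
}
```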

Now we have the nodes in the repository that the daemon queries for, so let’s have a look at the daemon.

The daemon

Now we have come to the part where Jeroen helped me out. It turns out that Hippo scans its classpath for all MANIFEST.MF files. If such a file contains a Hippo-Modules entry, Hippo expects its value to be a space-delimited list of classes that implement the DaemonModule interface. If you are using Maven, this is easy to accomplish with the jar plugin:

```xml
<plugin>
  <groupId>org.apache.maven.plugins</groupId>
  <artifactId>maven-jar-plugin</artifactId>
  <configuration>
    <archive>
      <manifestEntries>
        <Hippo-Modules>nl.gridshore.hippo.addons.deamon.WorkflowEventDeamon</Hippo-Modules>
      </manifestEntries>
    </archive>
  </configuration>
</plugin>
```
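To make the manifest mechanism more concrete, here is an illustrative sketch of how a container could collect the Hippo-Modules entries from the classpath. It is not Hippo’s actual implementation: the HippoModulesScanner class and its methods are hypothetical, and only the standard java.util.jar API is used.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Enumeration;
import java.util.List;
import java.util.jar.Attributes;
import java.util.jar.Manifest;

// Hypothetical sketch of manifest-based module discovery; not Hippo's code.
public class HippoModulesScanner {

    static final Attributes.Name HIPPO_MODULES = new Attributes.Name("Hippo-Modules");

    /** Returns the class names listed in one manifest's Hippo-Modules attribute. */
    static List<String> parseModules(InputStream manifestStream) throws IOException {
        List<String> classNames = new ArrayList<>();
        Manifest manifest = new Manifest(manifestStream);
        String value = manifest.getMainAttributes().getValue(HIPPO_MODULES);
        if (value != null) {
            // The attribute value is a space-delimited list of class names
            for (String name : value.trim().split("\\s+")) {
                if (!name.isEmpty()) {
                    classNames.add(name);
                }
            }
        }
        return classNames;
    }

    /** Scans every META-INF/MANIFEST.MF visible to the given class loader. */
    static List<String> discoverModules(ClassLoader loader) throws IOException {
        List<String> classNames = new ArrayList<>();
        Enumeration<URL> manifests = loader.getResources("META-INF/MANIFEST.MF");
        while (manifests.hasMoreElements()) {
            try (InputStream in = manifests.nextElement().openStream()) {
                classNames.addAll(parseModules(in));
            }
        }
        return classNames;
    }

    public static void main(String[] args) throws IOException {
        String manifest = "Manifest-Version: 1.0\r\n"
                + "Hippo-Modules: nl.gridshore.hippo.addons.deamon.WorkflowEventDeamon\r\n\r\n";
        List<String> modules = parseModules(
                new ByteArrayInputStream(manifest.getBytes(StandardCharsets.UTF_8)));
        System.out.println(modules); // prints [nl.gridshore.hippo.addons.deamon.WorkflowEventDeamon]
    }
}
```

Because ClassLoader.getResources returns every matching manifest, each jar on the classpath can contribute its own modules without any central registration.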

The daemon itself consists of three parts:

- Query the repository for registered WorkflowEventListeners

- Instantiate each listener using reflection

- Register each listener with the EventManager

The following code block shows the complete implementation of the daemon. I hope the code is clear enough.

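```java
import java.lang.reflect.InvocationTargetException;

import javax.jcr.Node;
import javax.jcr.NodeIterator;
import javax.jcr.Property;
import javax.jcr.RepositoryException;
import javax.jcr.Session;
import javax.jcr.query.Query;
import javax.jcr.query.QueryManager;
import javax.jcr.query.QueryResult;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

// DaemonModule (Hippo repository API) and the project's own WorkflowEventListener
// and EventManager also need imports; their packages depend on your setup.

public class WorkflowEventDeamon implements DaemonModule {

    // Assumes SLF4J for logging
    private static final Logger logger = LoggerFactory.getLogger(WorkflowEventDeamon.class);

    private Session session;

    public void initialize(Session session) throws RepositoryException {
        // Keep the session so it can be closed in shutdown()
        this.session = session;
        NodeIterator nodes = obtainRegisteredWorkflowEventListeners(session);
        while (nodes.hasNext()) {
            Node node = nodes.nextNode();
            Property property = node.getProperty("cust:class");
            WorkflowEventListener listener = constructListener(property.getString());
            if (listener != null) {
                EventManager.getInstance().addWorkflowEventListener(listener);
            }
        }
    }

    // Finds all configured listener nodes, wherever they are stored in the repository
    private NodeIterator obtainRegisteredWorkflowEventListeners(Session session) throws RepositoryException {
        QueryManager queryManager = session.getWorkspace().getQueryManager();
        Query query = queryManager.createQuery("select * from cust:workflowevent", Query.SQL);
        QueryResult queryResult = query.execute();
        return queryResult.getNodes();
    }

    // Loads the class and calls its public no-arg constructor; returns null on
    // failure so one bad configuration entry does not stop the other listeners
    private WorkflowEventListener constructListener(String className) {
        WorkflowEventListener listener = null;
        try {
            Class<?> clazz = this.getClass().getClassLoader().loadClass(className);
            listener = (WorkflowEventListener) clazz.getConstructor().newInstance();
        } catch (ClassNotFoundException e) {
            logger.error("FATAL error when loading listener class : class not found", e);
        } catch (NoSuchMethodException e) {
            logger.error("FATAL error when loading listener class : no such method", e);
        } catch (IllegalAccessException e) {
            logger.error("FATAL error when loading listener class : illegal access", e);
        } catch (InvocationTargetException e) {
            logger.error("FATAL error when loading listener class : invocation target", e);
        } catch (InstantiationException e) {
            logger.error("FATAL error when loading listener class : instantiation", e);
        } catch (ClassCastException e) {
            logger.error("FATAL error when loading listener class : not a WorkflowEventListener", e);
        }
        return listener;
    }

    public void shutdown() {
        session.logout();
    }
}
```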
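The reflection step of the daemon can also be written as a small, type-checked helper. In this sketch (the ListenerFactory name is hypothetical, and the multi-catch needs Java 7 or later) Class.asSubclass verifies that the configured class implements the expected interface, so a wrong class name in the repository is rejected and reported like any other configuration error instead of escaping as an uncaught ClassCastException.

```java
// Hypothetical helper: load a class by name, check its type and instantiate it.
public class ListenerFactory {

    /**
     * Loads className with the given loader, verifies that it is a subtype of
     * expectedType and invokes its public no-arg constructor.
     * Returns null (after reporting the problem) if any step fails.
     */
    static <T> T create(String className, Class<T> expectedType, ClassLoader loader) {
        try {
            Class<? extends T> clazz = loader.loadClass(className).asSubclass(expectedType);
            return clazz.getConstructor().newInstance();
        } catch (ReflectiveOperationException | ClassCastException e) {
            // ReflectiveOperationException covers class-not-found, missing
            // constructor, access and instantiation failures
            System.err.println("Could not create listener " + className + ": " + e);
            return null;
        }
    }

    public static void main(String[] args) {
        ClassLoader loader = ListenerFactory.class.getClassLoader();
        Runnable ok = create("java.lang.Thread", Runnable.class, loader);
        Runnable wrongType = create("java.lang.String", Runnable.class, loader);
        System.out.println(ok != null);        // prints true
        System.out.println(wrongType == null); // prints true
    }
}
```

In the daemon, a call such as create(className, WorkflowEventListener.class, getClass().getClassLoader()) would replace the cast and the individual catch blocks.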

The EventManager

The implementation of the EventManager is pretty straightforward. It contains a method to register listeners and a method to add an event. The following code block shows the implementation.

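```java
import java.util.Set;
import java.util.concurrent.CopyOnWriteArraySet;

// WorkflowEvent and WorkflowEventListener are the project's own types.

public class EventManager {

    private static final EventManager eventManager = new EventManager();

    // A copy-on-write set can be iterated safely while another thread registers
    // a listener; a Collections.synchronizedSet would need a synchronized block
    // around the loop in addEvent to avoid ConcurrentModificationException.
    private final Set<WorkflowEventListener> eventListeners =
            new CopyOnWriteArraySet<WorkflowEventListener>();

    private EventManager() {}

    // Calls every registered listener in the thread that raised the event
    public void addEvent(WorkflowEvent workflowEvent) {
        for (WorkflowEventListener workflowEventListener : eventListeners) {
            workflowEventListener.handleEvent(workflowEvent);
        }
    }

    public void addWorkflowEventListener(WorkflowEventListener workflowEventListener) {
        eventListeners.add(workflowEventListener);
    }

    public static EventManager getInstance() {
        return eventManager;
    }
}
```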
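Because addEvent runs the listeners in the thread of the workflow action that raised the event, a listener that throws a RuntimeException propagates into that workflow call, and the remaining listeners are not notified. One possible variation, sketched below, catches and logs failures per listener. The IsolatingEventManager name is hypothetical, it is an instance rather than a singleton to keep it testable, and the event types are restated with assumed shapes.

```java
import java.util.Set;
import java.util.concurrent.CopyOnWriteArraySet;
import java.util.logging.Level;
import java.util.logging.Logger;

// Sketch of an event manager that isolates failing listeners.
public class IsolatingEventManagerDemo {

    // Minimal stand-ins for the post's types (assumed shapes)
    static final class WorkflowEvent {
        final String message;
        WorkflowEvent(String message) { this.message = message; }
    }

    interface WorkflowEventListener {
        void handleEvent(WorkflowEvent event);
    }

    static final class IsolatingEventManager {
        private static final Logger LOG = Logger.getLogger(IsolatingEventManager.class.getName());

        // Registration is rare and dispatch is frequent, so copy-on-write fits well
        private final Set<WorkflowEventListener> listeners = new CopyOnWriteArraySet<>();

        void addWorkflowEventListener(WorkflowEventListener listener) {
            listeners.add(listener);
        }

        /** Delivers the event to every listener; returns how many listeners failed. */
        int addEvent(WorkflowEvent event) {
            int failures = 0;
            for (WorkflowEventListener listener : listeners) {
                try {
                    listener.handleEvent(event);
                } catch (RuntimeException e) {
                    // Log and continue so one broken listener cannot affect the others
                    failures++;
                    LOG.log(Level.WARNING, "Listener " + listener + " failed", e);
                }
            }
            return failures;
        }
    }

    public static void main(String[] args) {
        IsolatingEventManager manager = new IsolatingEventManager();
        StringBuilder seen = new StringBuilder();
        manager.addWorkflowEventListener(e -> { throw new IllegalStateException("boom"); });
        manager.addWorkflowEventListener(e -> seen.append(e.message));
        int failed = manager.addEvent(new WorkflowEvent("published"));
        System.out.println(failed + " " + seen); // prints 1 published
    }
}
```

Whether swallowing listener failures is right depends on the use case: for a Solr index that can be rebuilt, logging is probably enough; for something that must never miss an event, a retry queue would be safer.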

Dispatching events

I already mentioned that I want to dispatch events from workflow components. Discussing workflows in Hippo in detail is out of scope for this post, and to be honest I would not be the right person to do it. Still, I want to give you a very short introduction.

The default workflow for promoting content from draft to publication is the reviewed actions workflow, which is part of the default installation. Its workflow items define what happens when an author opens a document in edit mode, and what an editor can do. These kinds of workflow items are initiated by the frontend plugins: Wicket components that put Save and Cancel buttons on the screen. In this post I describe how to extend such a workflow and how to configure the repository/CMS to use our custom workflow item.

Let’s start with the custom workflow item:

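```java
import java.rmi.RemoteException;
import java.util.Date;

import javax.jcr.RepositoryException;

// WorkflowException, MappingException, BasicReviewedActionsWorkflow and
// BasicReviewedActionsWorkflowImpl come from the Hippo workflow API, and
// EventManager/WorkflowEvent from this project; add the matching imports.

public class CustomBasicReviewedActionsWorkflowImpl extends BasicReviewedActionsWorkflowImpl
        implements BasicReviewedActionsWorkflow {

    public CustomBasicReviewedActionsWorkflowImpl() throws RemoteException {
        super();
    }

    // Publish as usual; the event is only raised if super.publish() did not throw
    @Override
    public void publish(Date date) throws WorkflowException, MappingException, RepositoryException, RemoteException {
        super.publish(date);
        EventManager.getInstance().addEvent(new WorkflowEvent("This is my first workflow event from the publish"));
    }

    // Raised after the editable instance has been committed
    @Override
    public void commitEditableInstance() throws WorkflowException {
        super.commitEditableInstance();
        EventManager.getInstance().addEvent(new WorkflowEvent("This is my first workflow event from the commit editable instance"));
    }
}
```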
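The custom workflow relies on a simple property of overriding: the event is raised only after super.publish(date) returns, so if the base workflow throws, no event is sent. The stand-alone sketch below demonstrates this with hypothetical BaseWorkflow and EventedWorkflow classes instead of the real Hippo workflow classes, and records events in a list instead of calling the EventManager.

```java
import java.util.ArrayList;
import java.util.Date;
import java.util.List;

// Illustration of "call super first, then raise the event".
// BaseWorkflow and EventedWorkflow are hypothetical, not Hippo classes.
public class OverrideThenDispatchDemo {

    static class BaseWorkflow {
        private final boolean failPublish;

        BaseWorkflow(boolean failPublish) {
            this.failPublish = failPublish;
        }

        void publish(Date date) throws Exception {
            if (failPublish) {
                throw new Exception("publication refused");
            }
        }
    }

    static class EventedWorkflow extends BaseWorkflow {
        private final List<String> events;

        EventedWorkflow(boolean failPublish, List<String> events) {
            super(failPublish);
            this.events = events;
        }

        @Override
        void publish(Date date) throws Exception {
            super.publish(date);      // if this throws, the next line is never reached
            events.add("published");  // only recorded after a successful publish
        }
    }

    public static void main(String[] args) {
        List<String> events = new ArrayList<>();
        try {
            new EventedWorkflow(false, events).publish(new Date());
            new EventedWorkflow(true, events).publish(new Date());
        } catch (Exception e) {
            System.out.println("failed: " + e.getMessage());
        }
        System.out.println(events); // prints [published]
    }
}
```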

The workflow items are configured in the repository under /hippo:configuration/hippo:workflows/. For the BasicReviewedActionsWorkflow we need to change the property hippo:classname to the fully qualified name of our custom class in the following two locations:

- /hippo:configuration/hippo:workflows/default/authorreviewedactions

- /hippo:configuration/hippo:workflows/editing/reviewedactions

There is one thing left to do: Hippo uses JPOX for its workflows, and the workflow classes need to be enhanced (JDO bytecode enhancement) with the JPOX Maven plugin. The following code block gives an example:

```xml
<plugin>
  <groupId>jpox</groupId>
  <artifactId>jpox-maven-plugin</artifactId>
  <version>1.2.0-beta-2</version>
  <configuration>
    <verbose>false</verbose>
  </configuration>
  <executions>
    <execution>
      <phase>compile</phase>
      <goals>
        <goal>enhance</goal>
      </goals>
    </execution>
  </executions>
</plugin>
```

That is about it. If you start your repository and CMS after changing the properties mentioned above, the workflow items should send events when someone saves and closes a document.

I hope this helps in your exploration of Hippo workflows.

References

- http://www.onehippo.org/cms7//delve_into/custom/reference/repository/workflow.html

- Jeroen Reijn’s blog, for more information about the DaemonModule